Earthaccess#

imported on: 2025-04-18

This notebook is from NSIDC's Using earthaccess to Search for, Access and Open ICESat-2 Data in the Cloud tutorial

The original source for this document is ICESAT-2HackWeek/ICESat-2-Hackweek-2023

Using earthaccess to Search for, Access and Open ICESat-2 Data in the Cloud#

Tutorial Overview#

This notebook demonstrates how to search for, directly access, and work with cloud-hosted ICESat-2 Land Ice Height (ATL06) granules from an Amazon Elastic Compute Cloud (EC2) instance using the earthaccess package. Data in the “NASA Earthdata Cloud” are stored in Amazon Web Services (AWS) Simple Storage Service (S3) buckets. Direct access is an efficient way to work with data stored in an S3 bucket when you are working in the cloud. Cloud-hosted granules can be opened and loaded into memory without the need to download them first. This allows you to take advantage of the scalability and power of cloud computing.

As an example data collection, we use ICESat-2 Land Ice Height (ATL06) over the Juneau Icefield, AK, for March and April 2020. ICESat-2 data granules, including ATL06, are stored in HDF5 format. We demonstrate how to open an HDF5 granule and access data variables using xarray. Land ice heights are then plotted using hvplot.

We use earthaccess, a package developed by Luis Lopez (NSIDC developer) to allow easy search of the NASA Common Metadata Repository (CMR) and download of NASA data collections. It supports programmatic search and access for both DAAC-hosted and cloud-hosted data, and it manages authentication using Earthdata Login credentials, which are then used to obtain the S3 tokens needed for S3 direct access. With earthaccess, finding and accessing data can take as few as three lines of code. See nsidc/earthaccess.
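The three steps mentioned above (log in, search, open) can be sketched as a single helper. This is a minimal sketch, not part of earthaccess: the function name open_granules is hypothetical, and the package is passed in as the argument ea so that the steps can be tried out without a network connection. The calls login, search_data and open are the ones used later in this notebook.

```python
def open_granules(ea, short_name, bounding_box, temporal):
    """Log in, search and open granules using an earthaccess-like module `ea`.

    Hypothetical helper for illustration only.
    """
    ea.login()                       # 1. authenticate with Earthdata Login
    results = ea.search_data(        # 2. search CMR for matching granules
        short_name=short_name,
        bounding_box=bounding_box,
        temporal=temporal,
    )
    return ea.open(results)          # 3. open the granules as file-like objects


# Intended use on a machine with Earthdata Login credentials:
#
#   import earthaccess
#   files = open_granules(
#       earthaccess, "ATL06",
#       (-134.7, 58.9, -133.9, 59.2), ("2020-03-01", "2020-04-30"),
#   )
```

Each of the three steps is covered in detail in the sections that follow.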

Learning Objectives#

In this tutorial you will learn:

how to use earthaccess to search for ICESat-2 data using spatial and temporal filters and explore the search results;

how to open data granules using direct access to the ICESat-2 S3 bucket;

how to load an HDF5 group into an xarray.Dataset;

how to visualize the land ice heights using hvplot.

Prerequisites#

The workflow described in this tutorial forms the initial steps of an Analysis in Place workflow that would be run on an AWS cloud compute resource. You will need:

a JupyterHub, such as CryoHub, or AWS EC2 instance in the us-west-2 region.

a NASA Earthdata Login. If you need to register for an Earthdata Login see the Getting an Earthdata Login section of the ICESat-2 Hackweek 2023 Jupyter Book.

a .netrc file in your home directory that contains your Earthdata Login credentials. See Configure Programmatic Access to NASA Servers to create a .netrc file.

Credits#

This notebook is based on an NSIDC Data Tutorial originally created by Luis Lopez and Mikala Beig, NSIDC, modified by Andy Barrett, NSIDC, and updated by Jennifer Roebuck, NSIDC.

Computing Environment#

The tutorial uses Python and requires the following packages:

earthaccess, which enables Earthdata Login authentication and retrieves AWS credentials, enables collection and granule searches, and provides S3 access;

xarray, used to load data;

hvplot, used to visualize land ice height data.

We are going to import the whole earthaccess package.

We will also import the whole xarray package but use a standard short name xr, using the import <package> as <short_name> syntax. We could use anything for a short name but xr is an accepted standard that most xarray users are familiar with.

We only need the xarray module from hvplot so we import that using the import <package>.<module> syntax.

# For searching and accessing NASA data

import earthaccess

# For reading data, analysis and plotting

import xarray as xr

import hvplot.xarray

import pprint # For nice printing of python objects

Authenticate#

The first step is to get the correct authentication to access cloud-hosted ICESat-2 data. This is all done through Earthdata Login. The login method also gets the correct AWS credentials.

Login requires your Earthdata Login username and password. The login method will automatically search for these credentials as environment variables or in a .netrc file, and if those aren’t available it will prompt you to enter your username and password. We use a .netrc strategy here. A .netrc file is a text file located in your home directory that contains login information for remote machines. If you don’t have a .netrc file, login can create one for you when called as follows:

earthaccess.login(strategy='interactive', persist=True)

auth = earthaccess.login()

EARTHDATA_USERNAME and EARTHDATA_PASSWORD are not set in the current environment, try setting them or use a different strategy (netrc, interactive)

You're now authenticated with NASA Earthdata Login

Using token with expiration date: 09/19/2023

Using .netrc file for EDL
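To see in advance which of these sources login is likely to find, the search order described above can be imitated with the standard library. This is a rough sketch under stated assumptions, not the logic earthaccess itself runs: find_credential_source is a hypothetical helper, and it assumes the Earthdata Login entry in .netrc is stored under the host urs.earthdata.nasa.gov.

```python
import netrc
import os
from pathlib import Path

EDL_HOST = "urs.earthdata.nasa.gov"  # assumed host name of the .netrc entry


def find_credential_source(environ=None, netrc_path=None):
    """Return 'environment', 'netrc' or 'interactive' (hypothetical helper)."""
    environ = os.environ if environ is None else environ
    # 1. Environment variables, as named in the login message above
    if environ.get("EARTHDATA_USERNAME") and environ.get("EARTHDATA_PASSWORD"):
        return "environment"
    # 2. An entry for Earthdata Login in the .netrc file
    path = Path(netrc_path) if netrc_path else Path.home() / ".netrc"
    if path.exists():
        try:
            if netrc.netrc(str(path)).authenticators(EDL_HOST):
                return "netrc"
        except netrc.NetrcParseError:
            pass  # badly formed file: fall through to a prompt
    # 3. Nothing found, so a username and password prompt would be needed
    return "interactive"


print(find_credential_source())
```

If this prints interactive, expect login to ask for your username and password.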

Search for ICESat-2 Collections#

earthaccess leverages the Common Metadata Repository (CMR) API to search for collections and granules. Earthdata Search also uses the CMR API.

We can use the search_datasets method to search for ICESat-2 collections by setting keyword="ICESat-2". The argument passed to keyword can be any string and can include the wildcard characters ? or *.
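If the two wildcard characters are unfamiliar, their usual meaning can be tried out locally with the standard library. This is only an illustration of the pattern syntax, assuming CMR follows the common convention where ? stands for one character and * for any run of characters. The matching for a real search happens on the CMR server, and the list of short names below is typed in by hand.

```python
from fnmatch import fnmatchcase

# Hand-typed ICESat-2 short names, used only to demonstrate the patterns
short_names = ["ATL03", "ATL06", "ATL07", "ATL08", "ATL10", "ATL13"]


def match(pattern, names=short_names):
    """Return the names that match a wildcard pattern."""
    return [name for name in names if fnmatchcase(name, pattern)]


print(match("ATL0?"))  # ? stands for exactly one character
print(match("ATL1*"))  # * stands for any run of characters
```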

Note

To see a full list of search parameters you can type earthaccess.search_datasets?. Using ? after a python object displays the docstring for that object.

A count of the number of data collections (Datasets) found is given.

query = earthaccess.search_datasets(
    keyword="ICESat-2",
)

Datasets found: 69

In this case, there are 69 datasets that have the keyword ICESat-2.

search_datasets returns a python list of DataCollection objects. We can view metadata for each collection in long form by passing a DataCollection object to print or as a summary using the summary method for the DataCollection object. Here, I use the pprint function to Pretty Print each object.

for collection in query[:10]:
    pprint.pprint(collection.summary(), sort_dicts=True, indent=4)
    print('')  # Add a space between collections for readability

{ 'concept-id': 'C2120512202-NSIDC_ECS',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': [ 'https://n5eil01u.ecs.nsidc.org/ATLAS/ATL03.005/',

'https://search.earthdata.nasa.gov/search?q=ATL03+V005',

'http://openaltimetry.org/',

'https://nsidc.org/data/data-access-tool/ATL03/versions/5/'],

'short-name': 'ATL03',

'version': '005'}

{ 'cloud-info': { 'Region': 'us-west-2',

'S3BucketAndObjectPrefixNames': [ 'nsidc-cumulus-prod-protected/ATLAS/ATL03/005',

'nsidc-cumulus-prod-public/ATLAS/ATL03/005'],

'S3CredentialsAPIDocumentationURL': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentialsREADME',

'S3CredentialsAPIEndpoint': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentials'},

'concept-id': 'C2153572325-NSIDC_CPRD',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': ['https://search.earthdata.nasa.gov/search?q=ATL03+V005'],

'short-name': 'ATL03',

'version': '005'}

{ 'concept-id': 'C2144424132-NSIDC_ECS',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': [ 'https://n5eil01u.ecs.nsidc.org/ATLAS/ATL08.005/',

'https://search.earthdata.nasa.gov/search?q=ATL08+V005',

'https://openaltimetry.org/',

'https://nsidc.org/data/data-access-tool/ATL08/versions/5/'],

'short-name': 'ATL08',

'version': '005'}

{ 'cloud-info': { 'Region': 'us-west-2',

'S3BucketAndObjectPrefixNames': [ 'nsidc-cumulus-prod-protected/ATLAS/ATL08/005',

'nsidc-cumulus-prod-public/ATLAS/ATL08/005'],

'S3CredentialsAPIDocumentationURL': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentialsREADME',

'S3CredentialsAPIEndpoint': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentials'},

'concept-id': 'C2153574670-NSIDC_CPRD',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': ['https://search.earthdata.nasa.gov/search?q=ATL08+V005'],

'short-name': 'ATL08',

'version': '005'}

{ 'concept-id': 'C2144439155-NSIDC_ECS',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': [ 'https://n5eil01u.ecs.nsidc.org/ATLAS/ATL06.005/',

'https://search.earthdata.nasa.gov/search?q=ATL06+V005',

'https://openaltimetry.org/',

'https://nsidc.org/data/data-access-tool/ATL06/versions/5/'],

'short-name': 'ATL06',

'version': '005'}

{ 'cloud-info': { 'Region': 'us-west-2',

'S3BucketAndObjectPrefixNames': [ 'nsidc-cumulus-prod-protected/ATLAS/ATL06/005',

'nsidc-cumulus-prod-public/ATLAS/ATL06/005'],

'S3CredentialsAPIDocumentationURL': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentialsREADME',

'S3CredentialsAPIEndpoint': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentials'},

'concept-id': 'C2153572614-NSIDC_CPRD',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': ['https://search.earthdata.nasa.gov/search?q=ATL06+V005'],

'short-name': 'ATL06',

'version': '005'}

{ 'concept-id': 'C2565090645-NSIDC_ECS',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': [ 'https://n5eil01u.ecs.nsidc.org/ATLAS/ATL08.006/',

'https://search.earthdata.nasa.gov/search?q=ATL08+V006',

'https://openaltimetry.org/',

'https://nsidc.org/data/data-access-tool/ATL08/versions/6/'],

'short-name': 'ATL08',

'version': '006'}

{ 'cloud-info': { 'Region': 'us-west-2',

'S3BucketAndObjectPrefixNames': [ 'nsidc-cumulus-prod-protected/ATLAS/ATL08/006',

'nsidc-cumulus-prod-public/ATLAS/ATL08/006'],

'S3CredentialsAPIDocumentationURL': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentialsREADME',

'S3CredentialsAPIEndpoint': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentials'},

'concept-id': 'C2613553260-NSIDC_CPRD',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': ['https://search.earthdata.nasa.gov/search?q=ATL08+V006'],

'short-name': 'ATL08',

'version': '006'}

{ 'cloud-info': { 'Region': 'us-west-2',

'S3BucketAndObjectPrefixNames': [ 'nsidc-cumulus-prod-protected/ATLAS/ATL13/005',

'nsidc-cumulus-prod-public/ATLAS/ATL13/005'],

'S3CredentialsAPIDocumentationURL': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentialsREADME',

'S3CredentialsAPIEndpoint': 'https://data.nsidc.earthdatacloud.nasa.gov/s3credentials'},

'concept-id': 'C2153575088-NSIDC_CPRD',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': ['https://search.earthdata.nasa.gov/search?q=ATL13+V005'],

'short-name': 'ATL13',

'version': '005'}

{ 'concept-id': 'C2179092356-NSIDC_ECS',

'file-type': "[{'FormatType': 'Native', 'Format': 'HDF5', "

"'FormatDescription': 'HTTPS'}]",

'get-data': [ 'https://n5eil01u.ecs.nsidc.org/ATLAS/ATL13.005/',

'https://search.earthdata.nasa.gov/search?q=ATL13+V005',

'https://nsidc.org/data/data-access-tool/ATL13/versions/5/'],

'short-name': 'ATL13',

'version': '005'}

For each collection, summary returns a subset of fields from the collection metadata and Unified Metadata Model (UMM) entry.

concept-id is a unique identifier for the collection that is composed of an alphanumeric code and the provider-id for the DAAC.

short-name is the name of the dataset that appears on the dataset landing page. For ICESat-2, ShortNames are generally how different products are referred to.

version is the version of each collection.

file-type gives information about the file format of the collection files.

get-data is a collection of URLs that can be used to access data, dataset landing pages, and tools.

For cloud-hosted data, there is additional information about the location of the S3 bucket that holds the data and where to get credentials to access the S3 buckets. In general, you don’t need to worry about this information because earthaccess handles S3 credentials for you. Nevertheless it may be useful for troubleshooting.

Note

In Python, all data are represented by objects. These objects contain both data and methods (think functions) that operate on the data. earthaccess includes DataCollection and DataGranule objects that contain data about collections and granules returned by search_datasets and search_data respectively. If you are familiar with Python, you will see that the data in each DataCollection object is organized as a hierarchy of Python dictionaries, lists and strings. So if you know the dictionary key for the metadata entry you want, you can get that metadata using standard dictionary methods. For example, to get the concept ID for a collection from the example above, you could just use collection['meta']['concept-id']. However, in this case the concept_id method of the DataCollection object returns the same information. Take a look at nsidc/earthaccess to see how this is done.
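The dictionary-style access described in this note can be tried on a small stand-in. The dictionary below is built by hand and only mimics the meta and umm layout of a search result; real results hold many more fields, and the name example_collection is made up for this sketch.

```python
# Hand-built stand-in for one search result (illustration only)
example_collection = {
    "meta": {"concept-id": "C2153572614-NSIDC_CPRD"},
    "umm": {"ShortName": "ATL06", "Version": "005"},
}

# Nested keys are followed one level at a time
concept_id = example_collection["meta"]["concept-id"]
short_name = example_collection["umm"]["ShortName"]

# .get returns a default instead of raising KeyError for a missing entry
doi = example_collection["umm"].get("DOI", "not available")

print(concept_id, short_name, doi)
```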

For the ICESat-2 search results the provider-id is either NSIDC_ECS or NSIDC_CPRD. NSIDC_ECS is for collections archived at the NSIDC DAAC and NSIDC_CPRD is for the cloud-hosted collections.
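Because the provider-id is the part of the concept-id after the hyphen, the two kinds of collection can be told apart from the identifier alone. A minimal sketch, using two identifiers from the output above; the helper names are hypothetical, and the rule that NSIDC_CPRD means cloud-hosted is taken from the preceding paragraph.

```python
CLOUD_PROVIDER = "NSIDC_CPRD"  # provider-id of the cloud-hosted collections


def split_concept_id(concept_id):
    """Split a concept-id into its alphanumeric code and provider-id."""
    code, _, provider = concept_id.partition("-")
    return code, provider


def is_cloud_hosted(concept_id):
    """True if the concept-id belongs to the cloud provider."""
    return split_concept_id(concept_id)[1] == CLOUD_PROVIDER


for cid in ["C2144439155-NSIDC_ECS", "C2153572614-NSIDC_CPRD"]:
    print(cid, split_concept_id(cid), is_cloud_hosted(cid))
```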

For ICESat-2 short-name refers to the following products.

| ShortName | Product Description |
|---|---|
| ATL03 | ATLAS/ICESat-2 L2A Global Geolocated Photon Data |
| ATL06 | ATLAS/ICESat-2 L3A Land Ice Height |
| ATL07 | ATLAS/ICESat-2 L3A Sea Ice Height |
| ATL08 | ATLAS/ICESat-2 L3A Land and Vegetation Height |
| ATL09 | ATLAS/ICESat-2 L3A Calibrated Backscatter Profiles and Atmospheric Layer Characteristics |
| ATL10 | ATLAS/ICESat-2 L3A Sea Ice Freeboard |
| ATL11 | ATLAS/ICESat-2 L3B Slope-Corrected Land Ice Height Time Series |
| ATL12 | ATLAS/ICESat-2 L3A Ocean Surface Height |
| ATL13 | ATLAS/ICESat-2 L3A Along Track Inland Surface Water Data |

Search for cloud-hosted data#

If you only want to search for data in the cloud, you can set cloud_hosted=True.

Query = earthaccess.search_datasets(
    keyword = 'ICESat-2',
    cloud_hosted = True,
)

Datasets found: 29

Search a data set using spatial and temporal filters#

Once you have identified the dataset you want to work with, you can use the search_data method to search that dataset with spatial and temporal filters. As an example, we’ll search for ATL06 granules over the Juneau Icefield, AK, for March and April 2020.

Either concept-id or short-name can be used to search for granules from a particular dataset. If you use short-name you also need to set version. If you use concept-id, this is all that is required because concept-id is unique.

The temporal range is identified with standard date strings, and the bounding box is specified by the longitude and latitude of its lower-left and upper-right corners. Polygons and points, as well as shapefiles, can also be specified.
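A swapped latitude and longitude or a reversed date range is easy to type, so it can help to check the filter values before sending a search. The two helpers below are hypothetical and are not part of earthaccess. They assume the bounding box is ordered as lower-left longitude, lower-left latitude, upper-right longitude, upper-right latitude, and that dates are written as YYYY-MM-DD.

```python
from datetime import date


def check_bounding_box(bbox):
    """Check a (west, south, east, north) box given in degrees."""
    west, south, east, north = bbox
    if not (-180 <= west <= 180 and -180 <= east <= 180):
        raise ValueError("longitudes must lie between -180 and 180")
    if not (-90 <= south <= north <= 90):
        raise ValueError("latitudes must satisfy -90 <= south <= north <= 90")
    return tuple(bbox)


def check_temporal(temporal):
    """Check a (start, end) pair of YYYY-MM-DD strings and return dates."""
    start, end = (date.fromisoformat(s) for s in temporal)
    if start > end:
        raise ValueError("start date is after end date")
    return start, end


bbox = check_bounding_box((-134.7, 58.9, -133.9, 59.2))
start, end = check_temporal(("2020-03-01", "2020-04-30"))
print(bbox, start, end)
```

The west and east values are deliberately not compared with each other, since a box that crosses the antimeridian has a west longitude larger than its east longitude.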

This will display the number of granules that match our search.

results = earthaccess.search_data(
    short_name = 'ATL06',
    version = '006',
    cloud_hosted = True,
    bounding_box = (-134.7,58.9,-133.9,59.2),
    temporal = ('2020-03-01','2020-04-30'),
    count = 100
)

Granules found: 4

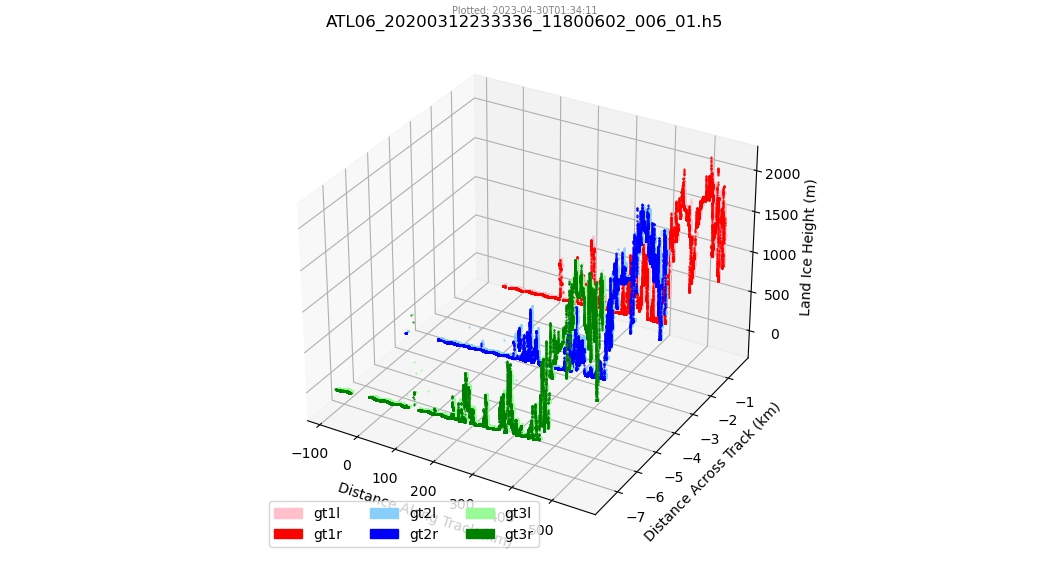

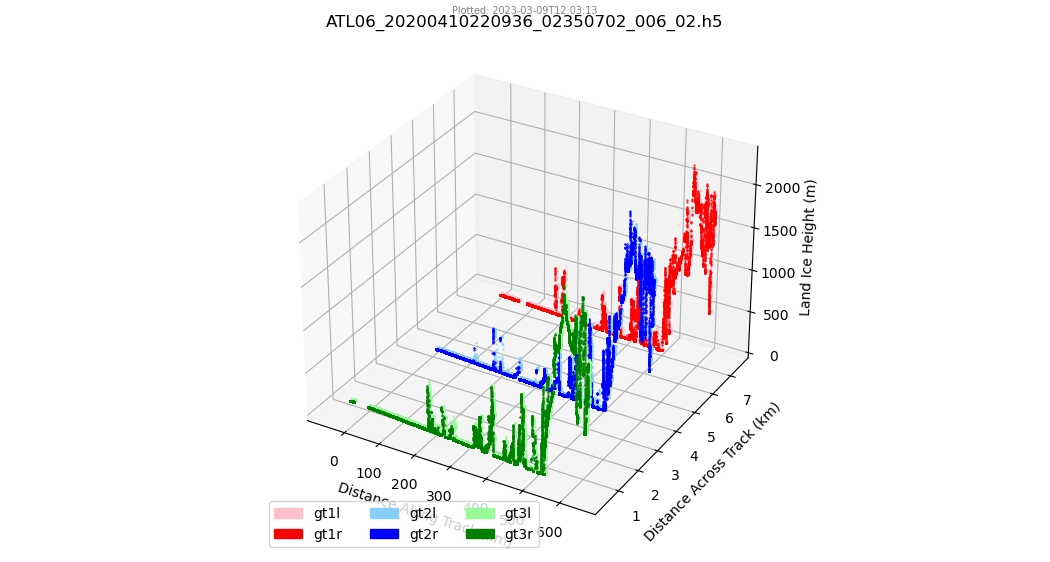

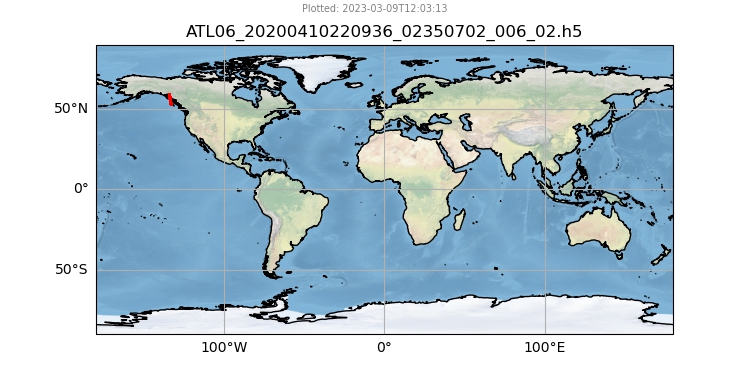

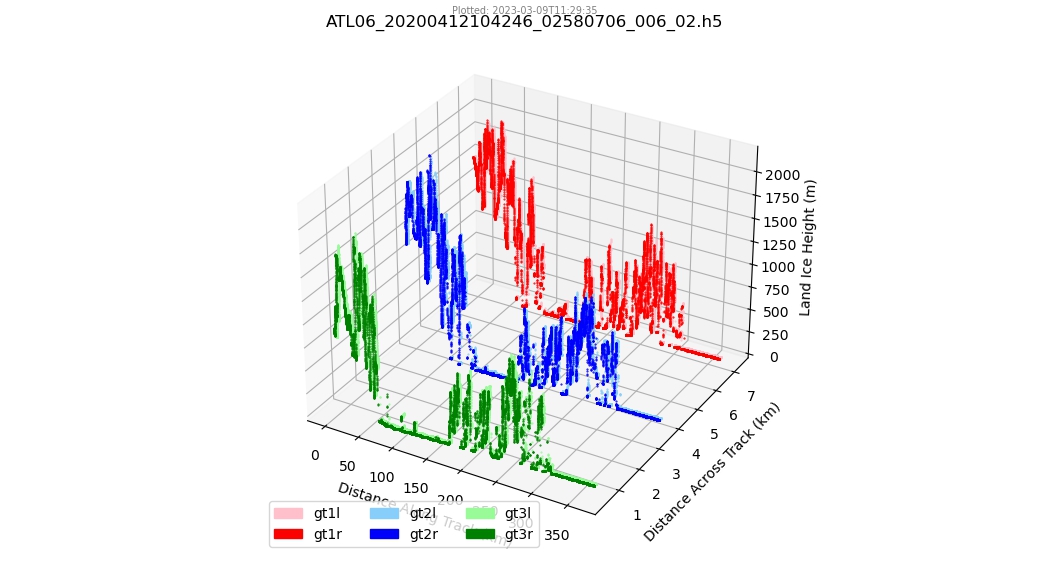

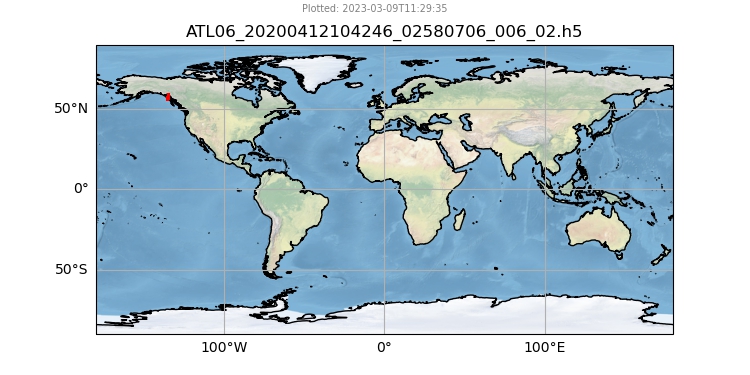

We’ll get metadata for these 4 granules and display it. The rendered metadata shows a download link, granule size and two images of the data.

The download link is https and can be used to download the granule to your local machine. This is similar to downloading DAAC-hosted data but in this case the data are coming from the Earthdata Cloud. For NASA data in the Earthdata Cloud, there is no charge to the user for egress from AWS Cloud servers. This is not the case for other data in the cloud.

[display(r) for r in results]

Data: ATL06_20200310121504_11420606_006_01.h5

Size: 0 MB

Spatial: {'HorizontalSpatialDomain': {'Geometry': {'GPolygons': [{'Boundary': {'Points': [{'Longitude': -134.3399, 'Latitude': 59.03152}, {'Longitude': -134.44371, 'Latitude': 59.03709}, {'Longitude': -134.75456, 'Latitude': 57.4161}, {'Longitude': -134.6551, 'Latitude': 57.41076}, {'Longitude': -134.3399, 'Latitude': 59.03152}]}}]}}}

Data: ATL06_20200312233336_11800602_006_01.h5

Size: 0 MB

Spatial: {'HorizontalSpatialDomain': {'Geometry': {'GPolygons': [{'Boundary': {'Points': [{'Longitude': -134.41459, 'Latitude': 59.54887}, {'Longitude': -134.63538, 'Latitude': 59.53739}, {'Longitude': -134.59212, 'Latitude': 59.32438}, {'Longitude': -134.2125, 'Latitude': 57.38652}, {'Longitude': -133.7114, 'Latitude': 54.60434}, {'Longitude': -133.55731, 'Latitude': 53.68969}, {'Longitude': -133.36771, 'Latitude': 53.70055}, {'Longitude': -133.5178, 'Latitude': 54.61543}, {'Longitude': -134.00456, 'Latitude': 57.3981}, {'Longitude': -134.37249, 'Latitude': 59.33607}, {'Longitude': -134.41459, 'Latitude': 59.54887}]}}]}}}

Data: ATL06_20200410220936_02350702_006_02.h5

Size: 0 MB

Spatial: {'HorizontalSpatialDomain': {'Geometry': {'GPolygons': [{'Boundary': {'Points': [{'Longitude': -134.28339, 'Latitude': 59.54228}, {'Longitude': -134.50433, 'Latitude': 59.53072}, {'Longitude': -134.46626, 'Latitude': 59.34436}, {'Longitude': -133.988, 'Latitude': 56.87365}, {'Longitude': -133.46142, 'Latitude': 53.88745}, {'Longitude': -133.34478, 'Latitude': 53.18986}, {'Longitude': -133.15724, 'Latitude': 53.20085}, {'Longitude': -133.27098, 'Latitude': 53.8986}, {'Longitude': -133.78272, 'Latitude': 56.88519}, {'Longitude': -134.24631, 'Latitude': 59.35609}, {'Longitude': -134.28339, 'Latitude': 59.54228}]}}]}}}

Data: ATL06_20200412104246_02580706_006_02.h5

Size: 0 MB

Spatial: {'HorizontalSpatialDomain': {'Geometry': {'GPolygons': [{'Boundary': {'Points': [{'Longitude': -134.62761, 'Latitude': 59.53143}, {'Longitude': -134.84844, 'Latitude': 59.54238}, {'Longitude': -134.8761, 'Latitude': 59.3961}, {'Longitude': -135.19822, 'Latitude': 57.71464}, {'Longitude': -135.43039, 'Latitude': 56.42147}, {'Longitude': -135.46534, 'Latitude': 56.22639}, {'Longitude': -135.26355, 'Latitude': 56.21509}, {'Longitude': -135.22779, 'Latitude': 56.41013}, {'Longitude': -134.98845, 'Latitude': 57.70329}, {'Longitude': -134.65611, 'Latitude': 59.38441}, {'Longitude': -134.62761, 'Latitude': 59.53143}]}}]}}}

[None, None, None, None]

Use Direct-Access to open, load and display data stored on S3#

Direct-access to data from an S3 bucket is a two step process. First, the files are opened using the open method. This first step creates a Python file-like object that is used to load the data in the second step.

Authentication is required for this step. The auth object created at the start of the notebook is used to provide Earthdata Login authentication and AWS credentials “behind-the-scenes”. These credentials expire after one hour so the auth object must be executed within that time window prior to these next steps.
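In a long session it is easy to lose track of that one-hour window. One simple approach is to note the time of the last login and check it before opening files. This is a sketch only: needs_refresh and the five-minute safety margin are assumptions made here, not features of earthaccess.

```python
import time

CREDENTIAL_LIFETIME_S = 60 * 60  # one hour, as stated above


def needs_refresh(login_time, now=None, margin_s=300):
    """True if the credentials are within margin_s seconds of expiring.

    login_time and now are readings of time.monotonic() in seconds.
    """
    now = time.monotonic() if now is None else now
    return (now - login_time) >= CREDENTIAL_LIFETIME_S - margin_s


login_time = time.monotonic()  # record this right after earthaccess.login()
print(needs_refresh(login_time))

# Intended use before the open step:
#
#   if needs_refresh(login_time):
#       auth = earthaccess.login()
#       login_time = time.monotonic()
```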

Note

The open step to create a file-like object is required because AWS S3, and other cloud storage systems, use object storage but most HDF5 libraries work with POSIX-compliant file systems. POSIX stands for Portable Operating System Interface for Unix and is a set of standards that include how to interact with files and file systems. Linux, Unix and macOS (which is Unix-like) are POSIX-compliant or very nearly so, and Windows offers comparable file operations. Critically, POSIX-compliant systems allow blocks of bytes, or individual bytes, to be read from a file. With object storage, an object is normally retrieved as a whole. To get around this limitation, an intermediary is used, in this case s3fs. This intermediary creates a local POSIX-compliant virtual file system. S3 objects are loaded into this virtual file system so they can be accessed using POSIX-style file functions.
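The block-of-bytes access that HDF5 libraries rely on looks like this on an ordinary local file. The sketch writes a small temporary file that starts with the 8-byte HDF5 signature followed by zero padding; it is not a valid granule and is used only to show seek and read. The helper read_block is a made-up name.

```python
import os
import tempfile


def read_block(path, offset, length):
    """Read `length` bytes starting at byte `offset` of a local file."""
    with open(path, "rb") as f:
        f.seek(offset)         # jump straight to the requested byte
        return f.read(length)  # read only the bytes that are needed


# Temporary stand-in file: HDF5 signature plus 1024 zero bytes
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\x89HDF\r\n\x1a\n" + bytes(1024))
    path = tmp.name

signature = read_block(path, 0, 8)  # first 8 bytes of the file
tail = read_block(path, 1024, 8)    # last 8 bytes, without reading the rest
os.remove(path)

print(signature, tail)
```

A file-like object returned by the open step supports the same seek and read calls, which is what lets an HDF5 reader pick out one group without pulling in the rest of the granule.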

In this example, data are loaded into an xarray.Dataset. Data could be read into numpy arrays or a pandas.DataFrame. However, each granule would have to be read using a package that reads HDF5 granules such as h5py. xarray does this all under-the-hood in a single line but only for a single group in the HDF5 granule, in this case land ice heights for the gt1l beam*.

*ICESat-2 measures photon returns from 3 beam pairs numbered 1, 2 and 3 that each consist of a left and a right beam.
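Since xarray loads one group at a time, reading the other beams means repeating the call with a different group path. The paths can be generated from the beam numbering just described. This sketch assumes that the other five beams follow the same naming pattern as gt1l, the group used in this notebook; land_ice_groups is a hypothetical helper.

```python
BEAM_PAIRS = (1, 2, 3)  # beam pair numbers
SIDES = ("l", "r")      # left and right beam of each pair


def land_ice_groups():
    """Return the land ice segment group path for each of the six beams."""
    return [
        f"/gt{pair}{side}/land_ice_segments"
        for pair in BEAM_PAIRS
        for side in SIDES
    ]


for group in land_ice_groups():
    print(group)

# Each path could then be passed as the group argument, for example:
#
#   ds = xr.open_dataset(files[1], group=land_ice_groups()[0])
```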

%time

files = earthaccess.open(results)

ds = xr.open_dataset(files[1], group='/gt1l/land_ice_segments')

CPU times: user 3 µs, sys: 0 ns, total: 3 µs

Wall time: 5.48 µs

Opening 4 granules, approx size: 0.0 GB

ds

<xarray.Dataset>

Dimensions: (delta_time: 24471)

Coordinates:

* delta_time (delta_time) datetime64[ns] 2020-03-12T23:40:48.24...

latitude (delta_time) float64 ...

longitude (delta_time) float64 ...

Data variables:

atl06_quality_summary (delta_time) int8 ...

h_li (delta_time) float32 ...

h_li_sigma (delta_time) float32 ...

segment_id (delta_time) float64 ...

sigma_geo_h (delta_time) float32 ...

Attributes:

Description: The land_ice_height group contains the primary set of deriv...

data_rate: Data within this group are sparse. Data values are provide...

hvplot is an interactive plotting tool that is useful for exploring data.

ds['h_li'].hvplot(kind='scatter', s=2)

Additional resources#

For general information about NSIDC DAAC data in the Earthdata Cloud:

FAQs About NSIDC DAAC’s Earthdata Cloud Migration

NASA Earthdata Cloud Data Access Guide

Additional tutorials and How Tos:

Bonus Material: Create a Data Citation#

Following ESIP Data Citation Guidelines the following information should be included in a data citation:

Author or creator

Public release date and maybe date dataset was last updated

Official Title of the dataset

Resolvable Persistent identifier: e.g. DOI

Version ID

Repository

Access date

Not all of these fields may be available. But assuming they are available, we can automate creating a citation. I’m going to use the BibTeX format.

For information on BibTeX citations see this wiki

import datetime as dt
import warnings  # needed for warnings.warn below


def parse_collection_citations(collection):
    """Parses the CollectionCitations entry in UMM"""
    ref = collection.get_umm("CollectionCitations")
    if len(ref) > 1:
        warnings.warn("More than one entry in CollectionCitations\nReturning first entry.")
    return {field.lower(): ref[0].get(field) for field in ["Publisher", "Title", "Version"]}


def get_doi(collection):
    """Returns the DOI entry in UMM, which is itself a dictionary"""
    return collection.get_umm("DOI")


def get_names(contact):
    """Returns the parts of a contact's name that are present"""
    names = [contact.get(field) for field in ['FirstName', 'MiddleName', 'LastName']]
    return [name.strip() for name in names if name is not None]


def format_names(names):
    """Joins name parts, wrapping parts that contain spaces in braces"""
    return ' '.join([name if ' ' not in name else "{"+name+"}" for name in names])


def get_author_list(collection):
    """Generates an author list from Technical Contacts in ContactPersons

    collection : A umm
    returns : a BibTeX formatted author list
    """
    authors = []
    for contact in collection.get_umm("ContactPersons"):
        if 'Technical Contact' in contact["Roles"]:
            author = format_names(get_names(contact))
            if author != "NSIDC User Services":
                authors.append(author)
    return {'authors': ' and '.join(authors)}


def to_datetime(datestr):
    """Converts an ISO date string, with or without a trailing Z, to a datetime"""
    if datestr.endswith('Z'):
        date = dt.datetime.fromisoformat(datestr[:-1])
    else:
        date = dt.datetime.fromisoformat(datestr)
    return date


def get_release_dates(collection):
    """Returns the release date and the date last updated, if present"""
    dates = collection.get_umm("MetadataDates")
    thiskey = {'CREATE': 'year', 'UPDATE': 'last_updated'}
    result = {}
    for r in dates:
        date = r.get('Date')
        date_type = r.get('Type')
        if date_type in thiskey:  # skip any other date types
            result[thiskey[date_type]] = to_datetime(date).strftime("%Y-%m")
    return result


def get_accessed():
    """Returns date string for today"""
    return {"accessed": dt.datetime.today().strftime("%Y-%m-%d")}


def get_url(collection):
    """Returns dataset landing page if it exists"""
    related_urls = collection.get_umm("RelatedUrls")
    return {"url": url["URL"] for url in related_urls if url["Type"] == "DATA SET LANDING PAGE"}


def make_citation_key(collection):
    """Generates a meaningful citation key"""
    return f"dataset:{collection.get_umm('ShortName')}-{collection.version()}"


def make_dataset_citation(collection):
    """Generates a BibTeX @misc citation for a dataset from a collection query"""
    citation = {
        **parse_collection_citations(collection),
        **get_doi(collection),
        **get_author_list(collection),
        **get_release_dates(collection),
        **get_url(collection),
        **get_accessed(),
    }
    citation_str = (
        "@misc{" + make_citation_key(collection) + ",\n" +
        ",\n".join([f' {key} = "{value}"' for key, value in citation.items()]) +
        "\n}"  # close the BibTeX entry
    )
    return citation_str


citation = make_dataset_citation(collection)
print(citation)

@misc{dataset:ATL13-005,
publisher = "NASA National Snow and Ice Data Center Distributed Active Archive Center",
title = "ATLAS/ICESat-2 L3A Along Track Inland Surface Water Data V005",
version = "005",
DOI = "10.5067/ATLAS/ATL13.005",
authors = "Michael F Jasinski and Jeremy D Stoll and David Hancock and John Robbins and Jyothi Nattala and Jamie Morison and Benjamin M. Jones and Michael E Ondrusek and Tamlin M. Pavelsky and Christopher Parrish and the {ICESat-2 Science} Team",
year = "2021-12",
last_updated = "2023-05",
url = "https://doi.org/10.5067/ATLAS/ATL13.005",
accessed = "2023-07-29"
}